Parents Claim Addictive Algorithms Caused Their Child's Death: Nazario v. ByteDance

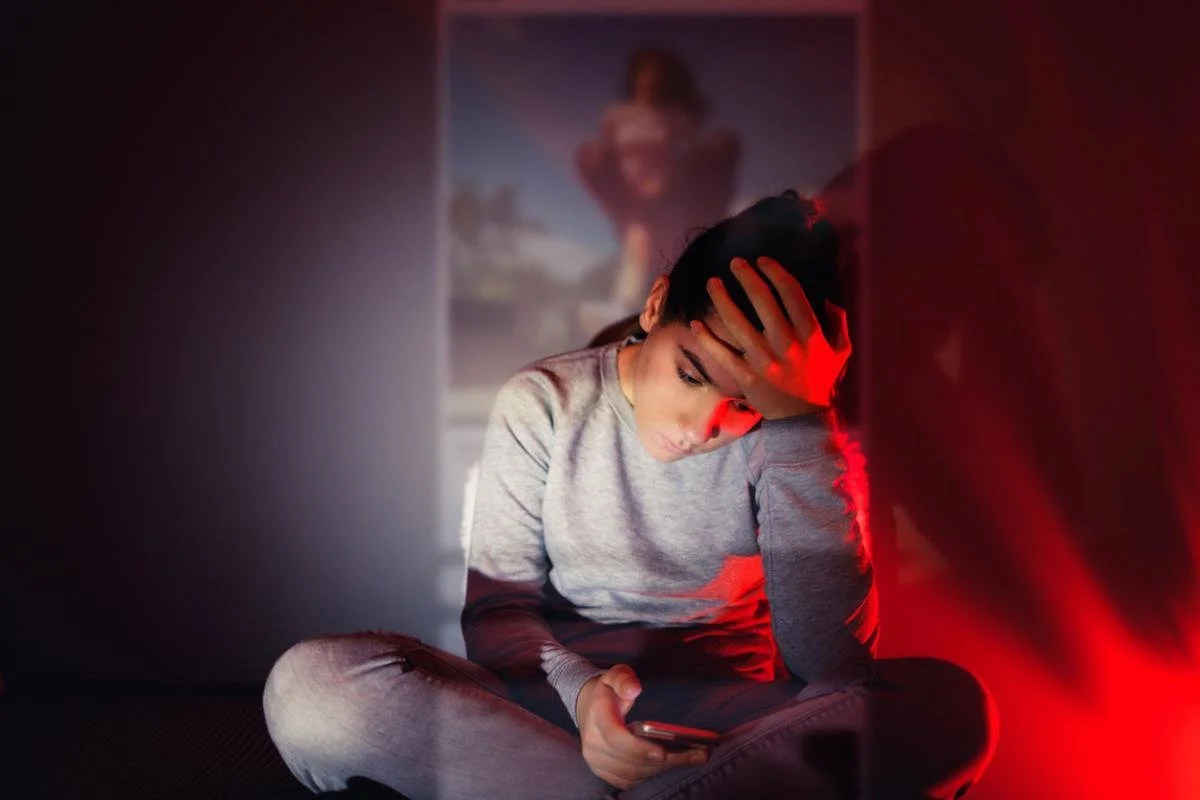

A New York Supreme Court complaint (Index No. 151540/2024) accuses ByteDance, TikTok's parent company, and other social media companies of designing "addictive" features that target minors and promote dangerous challenges, and alleges that those features caused a boy's death.

The Case: Norma Nazario v. ByteDance Ltd. et al.

The Court: NY Supreme Court

The Index No.: 151540/2024

The Plaintiff: Nazario v. ByteDance

Norma Nazario, individually and as representative of the estate of her late son, Zackery, filed a wrongful death lawsuit alleging that the platforms exploited children's neurodevelopmental vulnerabilities, drew Zackery into compulsive use, and pushed self-harm content that led directly to his fatal actions.

The Defendant: Nazario v. ByteDance

According to the complaint, ByteDance and the other social media defendants:

Optimize their apps for "retention" and "time spent," placing engagement above safety.

Actively nudge minors to create content or to "go live."

Algorithmically amplify dangerous challenges while withholding adequate warnings.

The Allegations: Nazario v. ByteDance

The complaint, filed in New York Supreme Court, makes several allegations:

Defendants deliberately exploit the neurodevelopmental vulnerabilities of minor users to "optimize" time spent and retention.

Defendants' algorithms pushed the plaintiff's son, Zackery, toward "exceedingly dangerous challenges and videos," which directly caused his fatal actions.

Defendants knew their products were addictive, failed to redesign or provide adequate warnings, and even "co-created" content through prompts, music curation, and "go live" nudges.

The Main Question: Nazario v. ByteDance

Did ByteDance's product designs, recommendation algorithms, and failure to warn or safeguard young users create a defective, deceptively marketed product that caused Zackery's death? If so, what liability attaches under New York tort and consumer-protection law?

FAQ: Nazario v. ByteDance

Q: What makes the suit a product liability case rather than a simple negligence case?

A: The complaint frames TikTok as a consumer product with design defects (addictive features, dangerous content amplification) and inadequate warnings, invoking strict liability principles normally applied to physical goods.

Q: How do the deceptive-practice claims under New York General Business Law (GBL) §§ 349 and 350 fit in?

A: Nazario alleges that the defendants misrepresented TikTok's safety, leading families to trust a platform allegedly engineered to maximize engagement at the expense of minors' mental and physical health.

Q: What "dangerous challenges" are cited?

A: While specific titles are under seal, the pleading says the For You Page pushed viral stunts encouraging self-harm and risky behavior that Zackery ultimately attempted.

Q: Could Section 230 of the Communications Decency Act shield the defendants?

A: The plaintiff argues that the platforms acted as designers and co-creators of content through algorithmic amplification, prompts, and curated music, placing her claims within the product-liability sphere, where Section 230 immunity is narrower.

Q: What happens next procedurally?

A: The court will rule on the pending motion (Motion Sequence No. 3). If the claims survive, discovery will address internal design documents, safety studies, and data on under-18 engagement, which could inform settlement or trial.

If you need to file a wrongful death lawsuit and have questions, please contact Blumenthal Nordrehaug Bhowmik DeBlouw LLP. Knowledgeable wrongful death attorneys are ready to help at the firm's offices in Riverside, San Francisco, Sacramento, San Diego, Los Angeles, and Chicago.